Overfitting is on of the primary problems, if not THE primary problem in machine learning. There are many aspects to it, but in a general sense, overfitting means that estimates of performance on unseen test examples are overly optimistic. That is, a model generalizes worse then expected.

We explain two common cases of overfitting: including information from a test set in training, and the more insidious form: overusing a validation set.

Imagine a classroom. The teacher says to the students: “There will be a test tomorrow. The test will be about this and that. Let’s go over the subject now”. On the next day even the most half-witted pupils do well, because they can remember the answers from yesterday.

That’s an analogy for training on the test set, which is a newbie mistake. There is a deviant cousin of it, called label leakage. It happens when information from a validation or test set leaks into the training set in one form or another.

For example, let’s say you want to make a feature for classification derived from Naive Bayes: replace values in a categorical column with percentage of examples being positive for that feature. If you make a mistake of computing the percentages on all the examples with labels, including validation set, you’ve got leakage (even though you don’t train on validation examples).

Overfitting validation set

Now imagine the teacher announcing the test, but not giving away the questions. However, when the day comes, he says: “You will be assigned a question randomly. If you can answer, good. If not, you can draw another one. If you don’t feel like answering, draw another, and so on, until you find one you like.”

That’s overfitting the validation set. It happens when you try various settings and compare them using the same validation set. If you do this enough times, you will find a configuration with a good score. It often happens in competition settings, when people ovefit the leaderboard.

How many times can you use a validation set? That’s a good question. There’s a paper on this: Generalization in Adaptive Data Analysis and Holdout Reuse. Here’s a summary of the core algorithm and a podcast explaining this stuff.

Examples

In finance, people overfit when backtesting. Backtesting is a term for validation using historical time series. See Pseudo-Mathematics and Financial Charlatanism: The Effects of Backtest Overfitting on Out-of-Sample Performance for more.

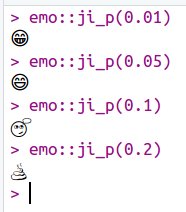

In statistics, overfitting is known as p-value hacking. It happens when a scientist tries various experiments until he finds one with statistical significance, as measured by the infamous p-value. That’s one of the reasons why we have “data science” now: statistics needed a fresh start.

R package emo

Then there’s overfitting in TV sports commentary, where pundits have access to various statistic trivia. If you watch sports, you have heard things like this:

Sports journalists must have p-hacking tools that put scientists to shame. Source: @talyarkoni

Finally, some plots. It is plain to see that the relation between these two can not be a coincidence:

If you live in Maine, USA, and you want you marriage to last, be very wary of margarine:

Source: Spurious correlations

Overfit automatically

Things get worse when you automate trying different configurations, as in hyperparameter tuning. Especially with a random or grid search. The “intelligent” variants, for example Bayesian methods, zero in on regions of search space which seem to be consistently good. This reduces the problem.

Random search hops all over the board, and the longer it runs, the more probable it is to find a spurious lucky configuration. We’ve witnessed it firsthand with Hyperband. So maybe curb your enthusiasm for random hyperparam search.

For more ways to overfit, see Clever Methods of Overfitting by John Langford (2005!).