Some people still post about LLMs’ inability to count how many times a particular letter appears in a given word. Let’s take a look and try to understand the basic issue here.

This shortcoming comes from tokenization. Large language models don’t see text as letters; they see it as tokens. A token can correspond to a single word, a part of a word such as a syllable, a short phrase, or a single character.
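To make this concrete, here is a minimal sketch of a tokenizer. The vocabulary below is made up for illustration, and the greedy longest-match rule is a simplification of what real tokenizers do, but the effect is the same: the model receives a list of token IDs, not a list of letters.

```python
# Hypothetical toy vocabulary (an assumption for illustration only).
# Real vocabularies are learned from data and are far larger.
VOCAB = {"straw": 0, "berry": 1, "s": 2, "t": 3, "r": 4,
         "a": 5, "w": 6, "b": 7, "e": 8, "y": 9}


def tokenize(text, vocab):
    """Greedy longest-match tokenization (a simplification of real schemes)."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, then shrink it.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                ids.append(vocab[piece])
                i = j
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return ids


token_ids = tokenize("strawberry", VOCAB)
print(token_ids)  # [0, 1]
```

With this toy vocabulary the model would see only `[0, 1]`. Nothing in those two numbers says how many times the letter “r” occurs.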

Seeing is believing, so take a look at how the Llama 3, Anthropic, and OpenAI tokenizers split text.

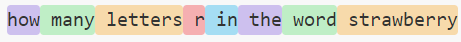

Llama 3:

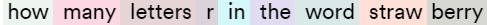

Anthropic:

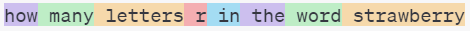

OpenAI:

For an analogy, imagine asking how many letters E are in the word 77. The question makes no sense as asked. Only if you expand “77” into “seventy seven” can you see that there are four letters E. An LLM can’t reliably expand a token into its letters in the same way.
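The analogy is easy to check in Python. This small sketch counts the letter in both forms; the variable names are only illustrative.

```python
digits = "77"
spelled_out = "seventy seven"

# The digit form contains no letters at all, so the count is zero.
print(digits.count("e"))       # 0

# Only the expanded form exposes the letters that can be counted.
print(spelled_out.count("e"))  # 4
```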

What can LLMs do

One way an LLM could answer a “how many letters” question is if it has seen a sentence saying “the word XYZ has three letters A”, either during training or in text retrieved with RAG at answer time.

Also, if a model has access to tools, for example a Python interpreter, it can figure it out by writing code. For example, claude-3.5-sonnet in Cursor provides the code without being asked to do so.

To determine how many times the letter ‘a’ appears in the word “abracadabra”, we can use a simple Python script:

    word = "abracadabra"
    count = word.count('a')
    print(f"The letter 'a' appears {count} times in '{word}'.")

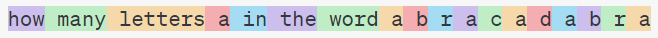

If you want to give a model a fair chance with the letters, separate them with spaces so that each letter is likely to become its own token.
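A minimal sketch of that trick is below. The helper name `spell_out` and the prompt wording are assumptions for illustration, and whether each letter really ends up as its own token depends on the tokenizer.

```python
def spell_out(word):
    """Put a space between the characters, e.g. 'abc' -> 'a b c'."""
    return " ".join(word)


spaced = spell_out("strawberry")
prompt = f"How many times does the letter 'r' appear in: {spaced}"
print(prompt)
```

The spaced-out form still holds the same letters, so the correct answer is unchanged. Only the way the text is split into tokens is different.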

Another answerable question would be how many tokens are in the word “strawberry”, since tokens are what an LLM actually works with. However, that it should be able to answer doesn’t mean that it will.

You can try asking a model something like “in your internal representation as an LLM, how many tokens does the word XYZ consist of?”

This could potentially provide clues about models of unknown identity. For example, the perplexity.com chatbot says “the word ‘strawberry’ is primarily represented as a single token in my vocabulary”, which would suggest that it’s Llama. However, it also says the word “abracadabra” is a single token, which is unlikely.