TensorFlow is a new deep learning library from Google. Immediately after release it became the most starred deep learning package on GitHub. From the hype one could conclude that TensorFlow is the best thing since sliced bread. Is it?

TensorFlow vs Theano

The first thing to realize about TensorFlow is that it’s a low-level library, meaning you’ll be multiplying matrices and vectors. Tensors, if you will. In this respect, it’s very much like Theano.
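To make "low-level" concrete, here is a minimal sketch in plain Python of one fully connected layer with a sigmoid, written out as the matrix-vector product it really is. It deliberately does not use TensorFlow's or Theano's API. In either library you would express the same computation with operations on tensors (in TensorFlow, `tf.matmul` for the product), and the library would also work out the gradients for you. The function name `dense_sigmoid` is hypothetical.

```python
import math

def dense_sigmoid(W, x, b):
    """Hypothetical helper: compute sigmoid(W x + b) by hand.

    W is a list of rows (one per output unit), x is the input vector,
    b is the bias vector. Plain lists stand in for tensors here.
    """
    out = []
    for row, bias in zip(W, b):
        # Dot product of one weight row with the input, plus that unit's bias
        pre_activation = sum(w * xi for w, xi in zip(row, x)) + bias
        # Element-wise logistic sigmoid
        out.append(1.0 / (1.0 + math.exp(-pre_activation)))
    return out
```

For instance, `dense_sigmoid([[0.0, 0.0]], [1.0, 2.0], [0.0])` returns `[0.5]`: with an all-zero weight row the pre-activation equals the bias, and the sigmoid of zero is one half.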

For those preferring a higher level of abstraction, Keras now works with either Theano or TensorFlow as a backend, so you can compare them directly. Is TF any better than Theano? Theano’s annoying compilation step is less pronounced in TF. Other than that, it’s mostly a matter of taste.

UPDATE: Google released Pretty Tensor, a higher-level wrapper for TF, and skflow, a simplified interface mimicking scikit-learn.

Need for speed

Now for the elephant in the room… Soumith’s benchmarks suggest that TensorFlow is rather slow.

And this:

@kastnerkyle any idea how to make it run faster or optimise grads? for pure cpu, the javascript version of mdn runs faster than tensorflow!

— hardmaru (@hardmaru) November 26, 2015

MDN in the tweet stands for mixture density networks.
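For readers unfamiliar with them: an MDN is a network whose outputs parameterize a mixture distribution, typically a mixture of Gaussians, and it is trained by minimizing the negative log-likelihood of the targets under that mixture. The sketch below, in plain Python and assuming a one-dimensional target, computes that loss for a single example from given mixture weights, means and standard deviations. The name `mdn_nll` is hypothetical; in a real MDN these parameters would come from the network's output layer.

```python
import math

def mdn_nll(y, pis, mus, sigmas):
    """Hypothetical helper: negative log-likelihood of scalar y under a
    1-D Gaussian mixture.

    pis: mixture weights (non-negative, summing to 1; in an MDN usually a softmax output)
    mus: component means
    sigmas: component standard deviations (positive)
    """
    density = 0.0
    for pi, mu, sigma in zip(pis, mus, sigmas):
        z = (y - mu) / sigma
        # Weighted Gaussian density of this component evaluated at y
        density += pi * math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
    return -math.log(density)
```

As a sanity check, a single standard normal component evaluated at `y = 0` gives 0.5 · ln(2π), about 0.919.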

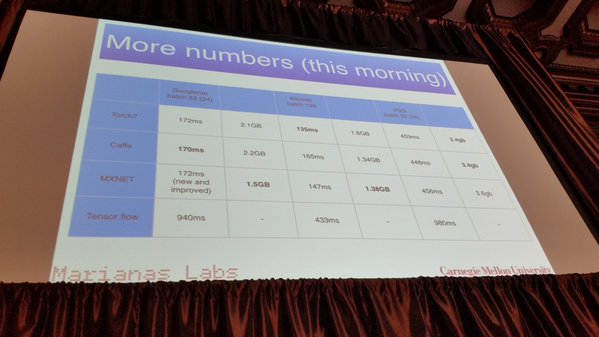

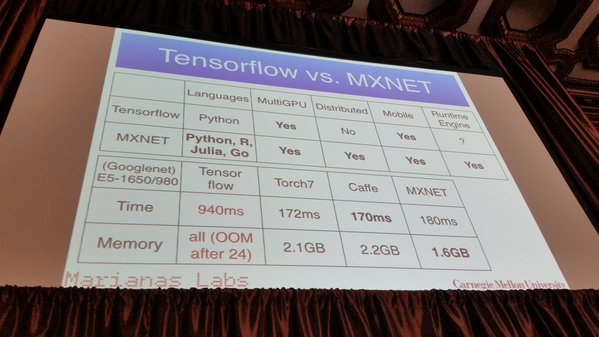

And Alex Smola’s numbers posted by Xavier Amatriain:

As you can see, at the moment TensorFlow doesn’t look too good compared to popular alternatives.

Distributed? Later.

What’s really interesting about the library is its purported ability to use multiple machines. Unfortunately, they haven’t released this part yet. No wonder: distributed is hard. Jeff Dean explains that it’s too intertwined with Google’s internal infrastructure, and says distributed support is one of the top features they’re prioritizing.

MXNet

If you want software that is faster and works in a distributed setting now, check out MXNet. As a bonus, it has interfaces for other languages, including R and Julia. The people behind MXNet have experience with neural networks and distributed backends, and have written XGBoost, probably the most popular tool among Kagglers.

Conclusion

To sum up, Google released a solid, but hardly outstanding, library that captured a disproportionate amount of mindshare. Good for them: fresh hires won’t have to learn a new API.

For more, see the Indico machine learning team’s take on TensorFlow, and maybe TensorFlow Disappoints.