The last few weeks have been a time of neural nets generating stuff. By neural nets we mean recurrent and convolutional neural networks, while the stuff is text, music, images and even video.

Text and music

It all started with Andrej Karpathy’s blog post on recurrent neural networks generating text, character by character. This is by no means a new idea - it goes back to a 2011 paper by Sutskever, Martens and Hinton, Generating Text with Recurrent Neural Networks. See Ilya Sutskever’s page for a PDF, video talk and code. He even set up an online demo, although it’s not very impressive by today’s standards.

Anyway, Andrej has written a very lucid explanation, provided a few examples, and posted his char-rnn Torch code on GitHub. Samim employed it to create synthetic Obama speeches and TED talks.

UPDATE: See a couple of RNN-generated TED talks. The clip starts with a demonic synthetic voice and Juergen Schmidhuber in the video. The RNN decides when the audience laughs.

Other people used the network to compose Mozart-style music and Irish folk music. The folk music gets the rhythm and harmony straight, and to an unsuspecting listener it might well pass for human work.

Note that these attempts worked on musical symbols. Another way is to feed raw audio to a recurrent net and some Stanford students did just that. You’ll find the paper, GRUV: Algorithmic Music Generation using Recurrent Neural Networks, among CS224d class reports, and an accompanying video on YouTube. We found the output akin to an old, half-tuned-in radio skipping between stations - but the music sounds very real.

All of the mentioned endeavours involve recurrent neural networks (mostly their variants with better memory - LSTM and GRU), which are particularly well suited for modelling sequences. Now, let’s turn to convnets, which are good for…

Images and video

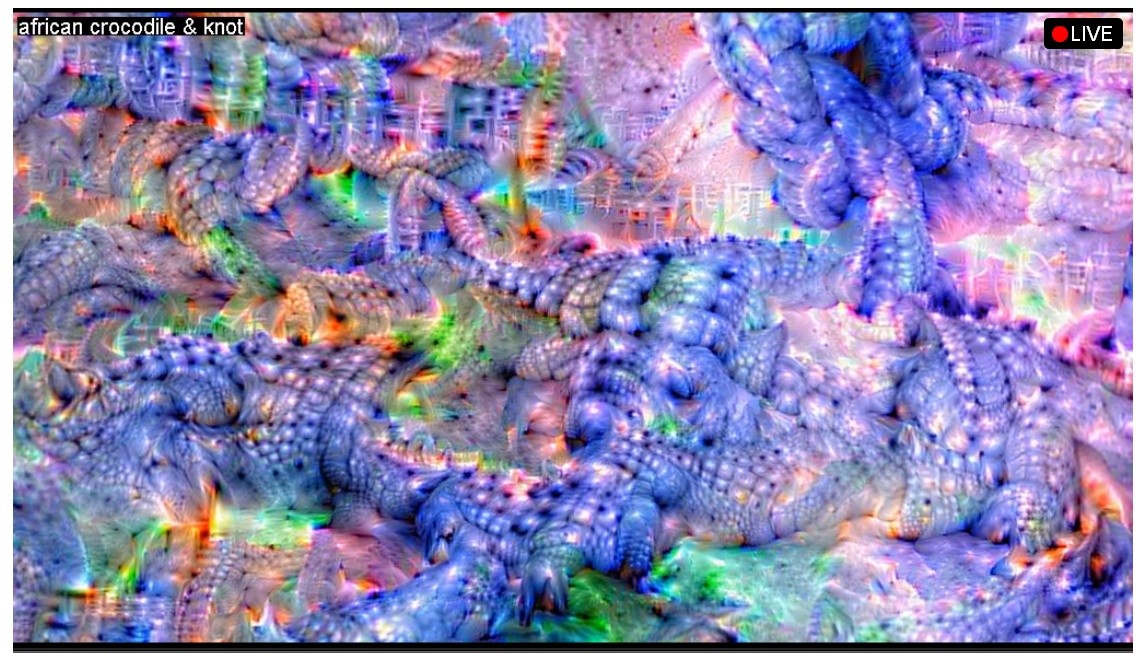

So far, the most common task for CNNs has been object recognition in images. In June, Google made a big splash by showing a few pictures created by an unspecified neural network (details are even scarcer than usual - no paper so far, let alone any code or live demo). The authors call the technique inceptionism. The images share a somewhat nightmarish quality - one commenter had this to say:

It seemed to be a sort of monster, or symbol representing a monster, of a form which only a diseased fancy could conceive. If I say that my somewhat extravagant imagination yielded simultaneous pictures of an octopus, a dragon, and a human caricature, I shall not be unfaithful to the spirit of the thing. A pulpy, tentacled head surmounted a grotesque and scaly body with rudimentary wings; but it was the general outline of the whole which made it most shockingly frightful.

Now take a deep breath and look at it:

The monster has become known as “puppyslug”. But don’t be scared, some creations are way prettier:

More at the inceptionism gallery. Also see this short video.

UPDATE: Google has released its inceptionism code under the name deepdream. Simultaneously with Google, J.C. Johnson from Stanford made available his implementation of inceptionism, cnn-vis.

Deepdream can produce a few distinct styles of images. What you see most often among people’s creations is dogs, due to a large number of said animals in ImageNet. If you want more variety, see the section on the LSD network below.

The community has embraced deepdream wholeheartedly. There are:

- deepdream Reddit thread

- dreamdeeply.com - a site where you can upload your image

- bat-country - a pip-installable version (a few nice examples)

- clouddream - a dockerized version

- DeepDream Animator for making videos

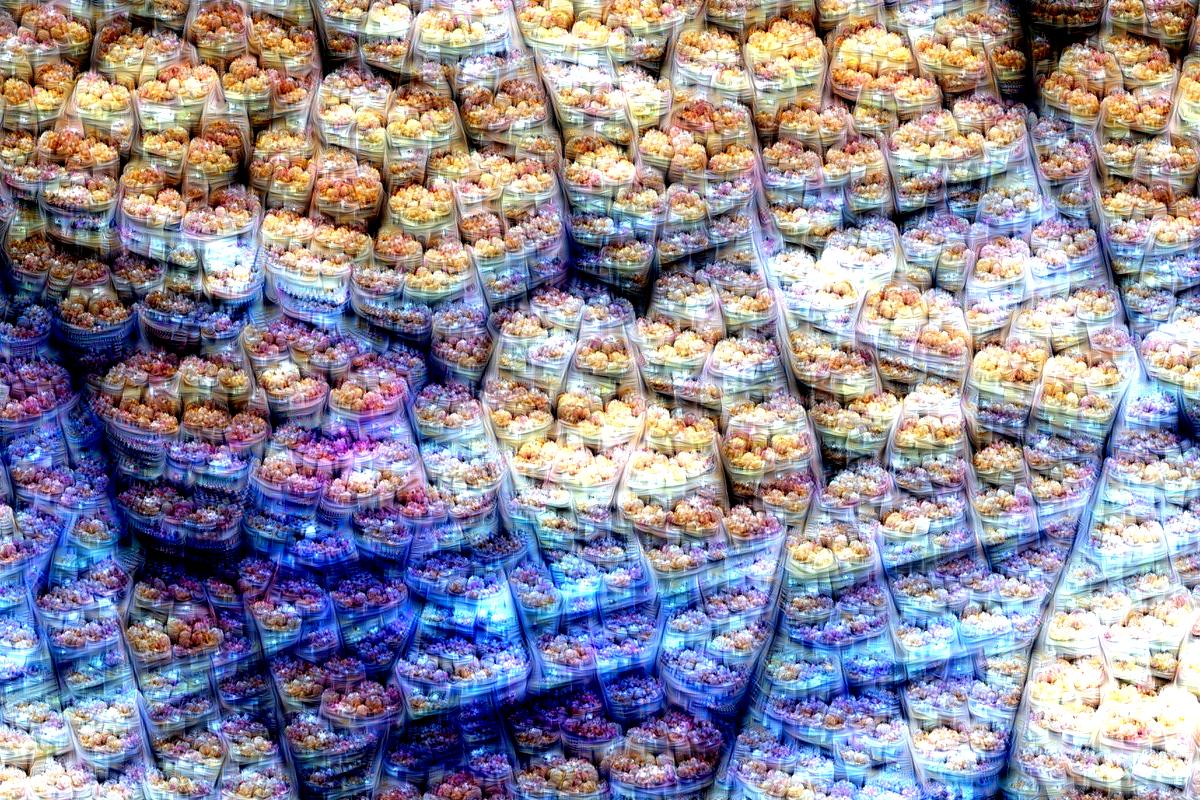

Ayahuasca girls. Credits: reddit; original image

Deep Stereo

There is a practical side to hallucinating views: one can take a few images and interpolate between them, creating a video. This is described in the DeepStereo: Learning to Predict New Views from the World’s Imagery paper and the YouTube clip shows how it works. While not as entertaining as the inceptionism art, imagine how this method could improve Street View in Google Maps.

EyeScream

Other researchers didn’t stay behind. Soumith Chintala from Facebook came up with a method of creating realistic-looking images. It’s called Eyescream and the Torch code is on GitHub.

LSD Neural Network

The project we find most impressive is the Large Scale Deep Neural Network, created by Jonas Degrave, Sander Dieleman and friends. It’s a convnet running in reverse: instead of producing labels from images, it makes images from labels. This is different from inceptionism, where a normal picture is fed to the net as input and the net only modifies it.
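To illustrate the "only modifies them" part, here is a toy sketch of deepdream-style gradient ascent on the input. It assumes a lot: the "network" is a single random ReLU layer, the "picture" is a list of 16 random numbers, and the names `layer`, `objective` and `dream_step` are hypothetical. The real code works on a trained convnet and adds tricks we leave out. What carries over is the idea: keep the weights fixed and nudge the pixels so that the layer's activations get stronger.

```python
import random

def layer(weights, pixels):
    """Toy stand-in for one network layer: ReLU(W @ x)."""
    return [max(0.0, sum(w * p for w, p in zip(row, pixels)))
            for row in weights]

def objective(weights, pixels):
    """Half the squared L2 norm of the layer's activations."""
    return 0.5 * sum(a * a for a in layer(weights, pixels))

def dream_step(weights, pixels, step_size=0.1):
    """One gradient ascent step on the input pixels (weights stay fixed)."""
    acts = layer(weights, pixels)
    # d(objective)/d(pixel_j) = sum_i acts[i] * W[i][j];
    # inactive units contribute nothing because their activation is 0.
    grad = [sum(a * row[j] for a, row in zip(acts, weights))
            for j in range(len(pixels))]
    # Normalise by the mean absolute gradient so the step size is comparable
    # between iterations; fall back to 1.0 if the gradient is all zeros.
    scale = sum(abs(g) for g in grad) / len(grad) or 1.0
    return [p + step_size * g / scale for p, g in zip(pixels, grad)]

rng = random.Random(0)
weights = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(8)]
picture = [rng.random() for _ in range(16)]  # stand-in for a real image

dreamed = picture
for _ in range(20):
    dreamed = dream_step(weights, dreamed)

before = objective(weights, picture)
after = objective(weights, dreamed)
print(before, after)
```

The result is still the original picture plus accumulated nudges, which is why inceptionism images keep the outline of whatever was fed in.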

You owe it to yourself to watch LSD NN on Twitch. People suggest classes from ImageNet in the chat and the net dreams about them. Even better, you can suggest a combination of two categories, which makes for some stunning visuals.

UPDATE: The authors have released Theano/Lasagne-based code.

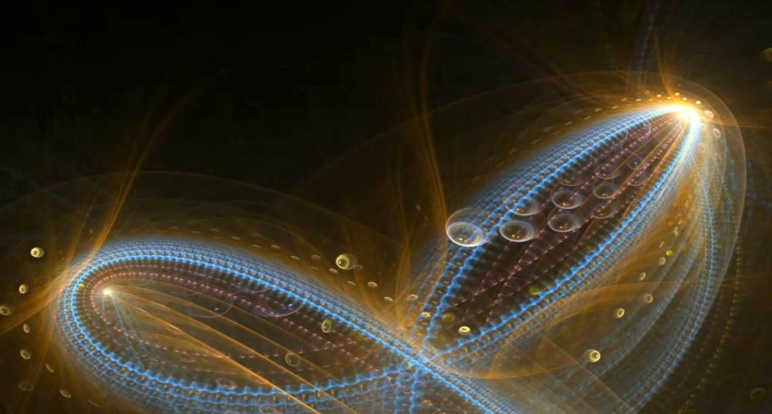

Electric sheep

If all this whetted your appetite for abstract eye candy, take a look at electric sheep. They are fractal videos, a bit similar to the LSD network output, and can run as a screensaver. The generating engine is called FLAM.

Interestingly, the sheep are bred to be attractive. Viewers indicate which sheep they like and this input is fed to a genetic algorithm, aiming to create even better offspring. Here are some screenshots; if you are a returning reader, you may recognize one or two images:

Google has more.
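For the curious, the breeding loop described above can be sketched in a few lines of Python. This is a generic genetic algorithm, not the actual Electric Sheep code: we assume a sheep's genome is just a list of floats, the votes are made up, and `breed` is a hypothetical name. Parents are picked with probability proportional to their votes, children get a prefix of one parent's genome and the rest from the other, and a little noise is added as mutation.

```python
import random

def breed(population, votes, rng, mutation_scale=0.05):
    """Produce one new generation from viewer votes."""
    # Selection weights: +1 so that a sheep with no votes can still be picked.
    weights = [v + 1 for v in votes]
    children = []
    for _ in range(len(population)):
        # Pick two parents, favouring the popular ones.
        mum, dad = rng.choices(population, weights=weights, k=2)
        # Single-point crossover: a prefix from one parent, the rest from the other.
        cut = rng.randrange(1, len(mum))
        child = mum[:cut] + dad[cut:]
        # Mutation: small Gaussian noise on every gene.
        child = [g + rng.gauss(0.0, mutation_scale) for g in child]
        children.append(child)
    return children

rng = random.Random(42)
flock = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(8)]
votes = [rng.randint(0, 10) for _ in flock]  # made-up viewer votes
next_flock = breed(flock, votes, rng)
print(len(next_flock), "new sheep")
```

In the real thing the genes would be parameters of a fractal, and the votes would come from people watching their screensavers.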