After testing CUDA on a desktop, we now switch to a Linux laptop with 64-bit Xubuntu. Getting CUDA to work is harder here. Will the effort be worth the results?

If you have a laptop with a Nvidia card, the thing probably uses it for 3D graphics and Intel’s built-in unit for everything else. This technology is known as Optimus and it happens to make things anything but easy for running CUDA on Linux.

The problem is with GPU drivers, specifically between Linux being open-source and Nvidia drivers being not. This strained relation at one time prompted Linus Torvalds to give Nvidia a finger with great passion.

Installing GPU drivers

Here’s a solution for the driver problem. You need a package called bumblebee. It makes a Nvidia card accessible to your apps. To install drivers and bumblebee, try something along these lines:

sudo apt-get install nvidia-current-updates

sudo apt-get install bumblebee

Note that you don’t need drivers that come with a specific CUDA release, just Nvidia’s proprietary drivers.

Now, usually you don’t log in as root, but you need to run bumblebee’s app, optirun, as root:

optirun --no-xorg <your_cuda_program>

The easiest way to do this is to execute sudo su to get a superuser’s shell. That’s because (on Ubuntu) sudo has restrictive security measures that complicate the setup.

Your CUDA apps will need a proper environment, including PATH, LD_LIBRARY_PATH etc., so set them up either in root’s .bashrc or system-wide. There’s /etc/environment, but it’s not a real shell script so we don’t like it. See the documentation for details.

To sum up, we set an alias we then use to execute commands via optirun:

alias opti='optirun --no-xorg'

opti python some_cuda_app.py

Timing tests

We’ll run the same tests as in Running things on a GPU, to see how timings compare. The processor is much faster and the card bit slower than previously: the test laptop has an Intel i7-3610QM CPU and a Nvidia GeForce GT 650M video card.

The CPU has four cores, each with two threads, for a total of eight. Unfortunately Python uses only one thread.

Cudamat

For Cudamat, we use rbm_numpy.py and rbm_cudamat.py examples, as before.

- CPU: 95 seconds per iteration

- GeForce GT 650M: 14 seconds (nearly seven times faster)

Theano / GSN

The output snippets for CPU and GPU, respectively:

1 Train : 0.607189 Valid : 0.366880 Test : 0.364851

time : 541.4789 MeanVisB : -0.22521 W : ['0.024063', '0.022423']

2 Train : 0.304074 Valid : 0.277537 Test : 0.277588

time : 530.5445 MeanVisB : -0.32569 W : ['0.023881', '0.022516']

1 Train : 0.607192 Valid : 0.367054 Test : 0.364999

time : 106.6691 MeanVisB : -0.22522 W : ['0.024063', '0.022423']

2 Train : 0.302400 Valid : 0.277827 Test : 0.277751

time : 258.5796 MeanVisB : -0.32510 W : ['0.023877', '0.022512']

3 Train : 0.292427 Valid : 0.267693 Test : 0.268585

time : 258.4404 MeanVisB : -0.38779 W : ['0.023882', '0.022544']

4 Train : 0.268086 Valid : 0.267201 Test : 0.268247

time : 258.1599 MeanVisB : -0.43271 W : ['0.023856', '0.022535']

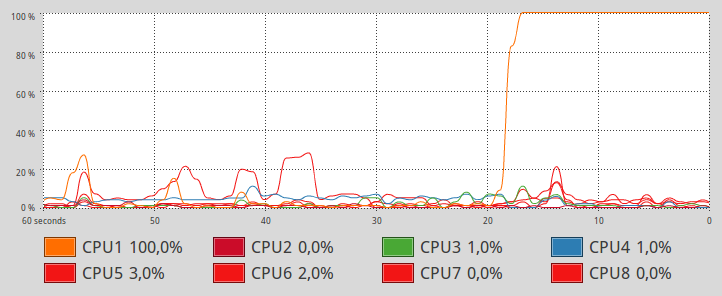

For some reason, on the GPU the first iteration goes way faster than the next. Those next iterations are only two times faster compared to the CPU.

A possible explanation is throttling: when hardware gets too hot, it slows down. It’s especially likely to happen when both CPU and GPU are under load. And the 600 series GeForce cards are CPU-intensive.

To sum up, it seems that on a laptop, a good strategy would be to employ all CPU cores. That would make using a GPU unnecessary.