For the last year or so, OpenAI has been the main source of drama in the AI world. Now there’s a new player, although it is shaping up to be a one-hit wonder. Here’s a short overview of what happened.

On Thursday, September 5, 2024, an individual by the name of Matt Shumer announced that he had the top open-source LLM in the world. The benchmark scores suggested the 70B model was better than Llama 405B and on par with the leading commercial LLMs. How? By using “reflection-tuning, a technique developed to enable LLMs to fix their own mistakes”.

If the too-good-to-be-true benchmark scores didn’t ruffle your feathers, the next red flag came when the model uploaded to Hugging Face didn’t work for some mysterious reason. Matt hurried to explain that they were “re-training” the model.

oh husbant, you uploaded the wrong weights to huggingface and now we are homeress. pic.twitter.com/LTGG7sZeeQ

— sankalp (@dejavucoder) September 9, 2024

Then they uploaded a “new” version, supposedly trained for three epochs instead of two. People soon discovered that it was exactly the same as the old one, only split into shards differently so that it wouldn’t be obvious that the two were identical.
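For the curious, here is a rough sketch of why re-sharding hides nothing: if you fingerprint each tensor by name, it no longer matters which file it lives in. This is an illustration, not the method anyone actually used. The shards are toy dictionaries of tensor name to raw bytes, and `fingerprint`, `same_weights` and the tensor names are made up for the example. With real checkpoints you would first read the tensors out of the shard files.

```python
import hashlib


def fingerprint(shards):
    """Map each tensor name to a SHA-256 digest of its raw bytes.

    `shards` is a list of dicts (tensor name -> bytes), one dict per shard file.
    """
    digests = {}
    for shard in shards:
        for name, raw in shard.items():
            if name in digests:
                raise ValueError(f"tensor {name!r} appears in more than one shard")
            digests[name] = hashlib.sha256(raw).hexdigest()
    return digests


def same_weights(shards_a, shards_b):
    """True if both checkpoints hold identical tensors, however they are sharded."""
    return fingerprint(shards_a) == fingerprint(shards_b)


# Toy data standing in for real weights (hypothetical names and bytes).
old_upload = [
    {"layer0.weight": b"\x01\x02", "layer1.weight": b"\x03\x04"},
    {"lm_head.weight": b"\x05\x06"},
]
# Same tensors, different split across files.
new_upload = [
    {"layer0.weight": b"\x01\x02"},
    {"layer1.weight": b"\x03\x04", "lm_head.weight": b"\x05\x06"},
]
# What an extra epoch of training would look like: at least one tensor changes.
retrained = [
    {"layer0.weight": b"\x01\x02", "layer1.weight": b"\x03\x09"},
    {"lm_head.weight": b"\x05\x06"},
]

print(same_weights(old_upload, new_upload))  # True
print(same_weights(old_upload, retrained))   # False
```

A model that had really been trained for another epoch would differ in essentially every tensor, so a full match is hard to explain away.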

Various people tried to replicate the benchmark results and consistently failed.

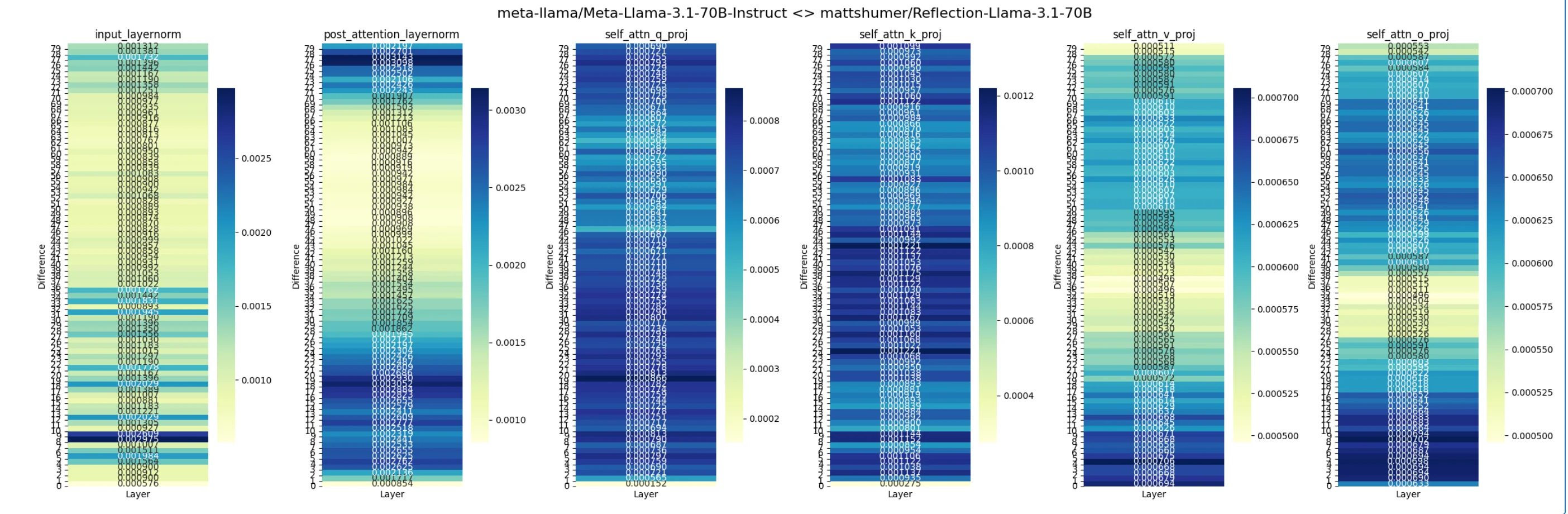

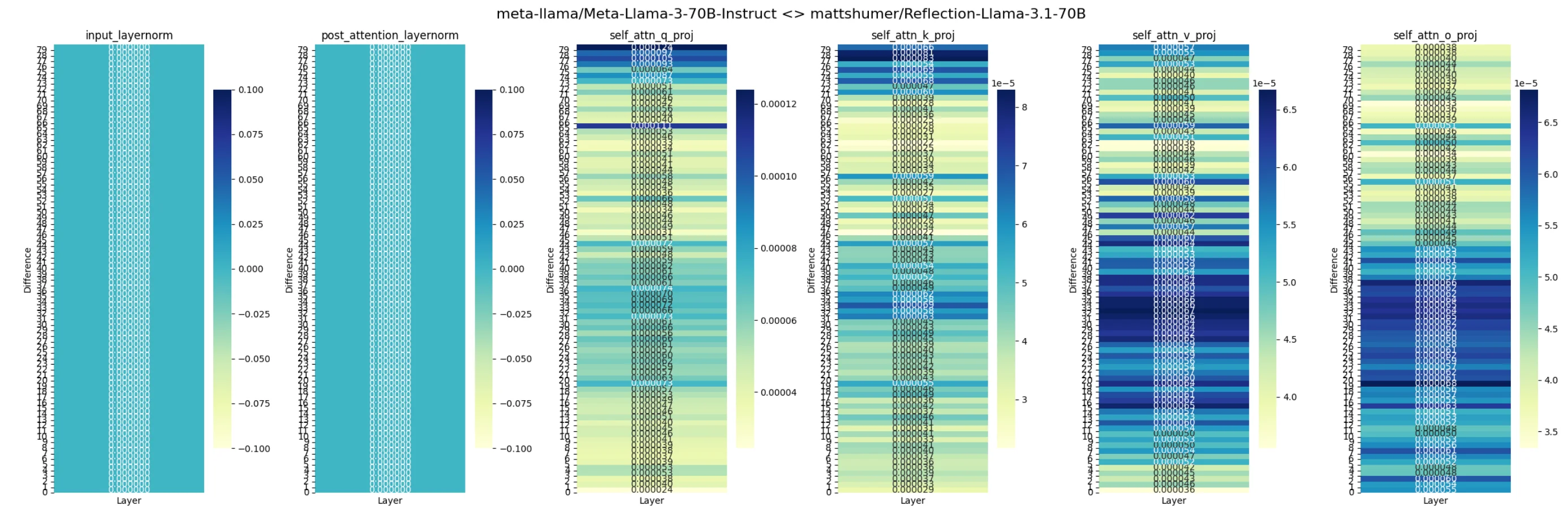

The 70B model is just a worse version of Llama 3 70B, as these diff images show.

r/LocalLLaMA: Reflection-Llama-3.1-70B is actually Llama-3.
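How the diff images were produced is not described here, but one plausible way to run this kind of check is to compare the suspect model with each candidate base, tensor by tensor, and see which base it sits closest to. The sketch below uses plain lists of floats as stand-ins for real tensors. `mean_abs_diff`, `diff_report` and all names and values are hypothetical.

```python
def mean_abs_diff(a, b):
    """Mean absolute element-wise difference between two equal-length tensors."""
    if len(a) != len(b):
        raise ValueError("tensors must have the same number of elements")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def diff_report(model, base):
    """Per-tensor mean absolute difference for every tensor name both models share."""
    return {
        name: mean_abs_diff(model[name], base[name])
        for name in sorted(model.keys() & base.keys())
    }


# Toy weights (hypothetical values): the suspect is a light fine-tune of base_a.
base_a = {"layer0.weight": [0.5, -0.25], "layer1.weight": [1.0, 2.0]}
base_b = {"layer0.weight": [4.0, 3.0], "layer1.weight": [-1.0, 0.0]}
suspect = {"layer0.weight": [0.5, -0.25], "layer1.weight": [1.5, 2.0]}

print(diff_report(suspect, base_a))  # {'layer0.weight': 0.0, 'layer1.weight': 0.25}
print(diff_report(suspect, base_b))  # {'layer0.weight': 3.375, 'layer1.weight': 2.25}
```

Small differences everywhere against one base and large ones against the other point to which model the fine-tune started from.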

Besides the uploaded weights, the model was also accessible through an API. The uploaded 70B model and the API gave different results; in other words, the model on HF and the model behind the API were not the same.

Moreover, people discovered that the model behind the API was just Claude with a custom system prompt. Matt then apparently switched it to GPT-4o to shake off the pursuers.

Here’s a Twitter thread with a summary of it all.

Long story short, apparently it’s all fake. The question on people’s minds is WHY. The probable answers are some kind of attempted money grab, plain stupidity, trolling, or maybe they just had concepts of a plan. Time will probably tell.

As of now, Matt says: “We have a team working tirelessly to understand what happened and will determine how to proceed once we get to the bottom of it. Once we have all of the facts, we will continue to be transparent with the community about what happened and next steps.”

Yes, by all means, continue to be transparent.