This is a high-level review of what’s been happening with large language models, to which we’ll also refer as the models, or chatbots. We focus on how expanding the context length allowed the models to rely more on data in prompts and less on the knowledge stored in their weights, resulting in fewer hallucinations.

Progress

The year 2023 felt like a moon landing year for LLMs. Since then, things have slowed down a bit. We’re not going to Mars. New models arrive, but the progress is incremental, not revolutionary. It feels slow. Going into heavy prompt engineering to achieve better results is a grind, and the price in compute is hefty. It is not a road to superintelligence, it’s plowing a field with an ox.

Once you train on the whole internet, where do you get more data? To what extent does synthetic data work? Apparently Meta used some synthetic data in training Llama 3, which at 405B parameters is the largest open source model so far.

A notable problem that was quite acute a year ago and has been solved since concerns context length - the amount of text a model can process at once. A year ago, most models had relatively small context windows, in the order of a few thousand tokens. With the context length of 512, 1024, or 2048 tokens popular earlier, you could barely fit in the chat history, not to mention any additional data. Now it’s hundreds of thousands, or millions (if you have the hardware for it). We’ll get to the consequences shortly.

Usage

Some people seem to believe that large language models, or AI, if you want to be more vague, will change everything, like the internet changed everything. The evidence is much more modest. Currently, LLMs are mostly useful for basic (re)search and for programming assistance.

For example, one can go to a site like perplexity.ai, which combines a chatbot with a search engine. Before, you would click the first few search results to research a topic. Now the chatbot ingests and summarizes them behind the scenes. This is known as RAG (Retrieval Augmented Generation). Instead of relying on knowledge acquired during training, a model processes information retrieved from elsewhere and given in the prompt. This mode of operation has become more alluring with growing context length.

Even with RAG some models often deliver half-truths or completely wrong answers. Still, it’s pretty convenient, especially with the very apparent decline of Google’s search quality. In short, around 2019 Google basically said “having good search results is nice, but you know what’s nicer? Having bad search results and people clicking ads instead.”

How did that turn out for them? Paraphrasing Churchill, they chose shame, and then had war thrown in a little later on.

Coming back to LLMs, another area where they do well is coding help, with bigger context windows similarly advantageous. As long as you manage your expectations, the models can write small snippets of code, which you’ll have to check for correctness, of course. Perhaps more interestingly, LLMs can read and explain code, as well as scientific papers - with the same reservation that you have to fact-check everything.

Nature

To think realistically about large language models, it is helpful to understand some basic facts about their nature, stemming from the way people train them. We will focus on two aspects: hallucinations and mediocrity.

Language models are trained to predict the next token in a sequence. In that process there are absolutely no provisions for fact checking at all. Therefore, when you ask something, the model will respond, and if it doesn’t know the answer, it will make something up. In our opinion, this is the biggest and the most fundamental problem with LLMs. Finetuning and prompt engineering will only get you so far with managing hallucinations, because hallucinating is the very nature of chatbots.

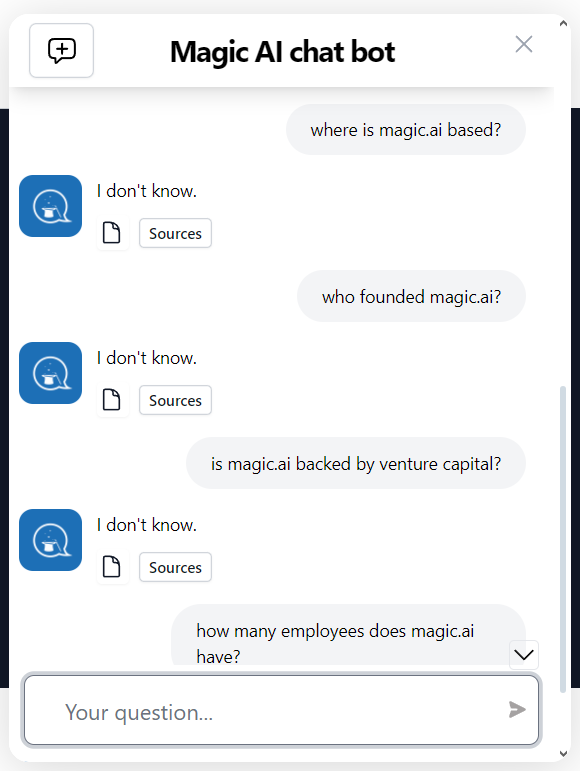

However, with RAG you can instruct a model to strictly stick to the data in front of them (in the prompt), for better or worse.

Magic.ai chatbot doesn’t know.

Mediocrity also results from pretraining, almost by definition. Basically, people LLMs on the whole internet, and when you consume everything available, it will be low-brow on average. What goes in, comes out.

The practical consequence is that if your knowledge about a topic is below average, a chatbot is likely to be able to help you with it, and vice versa. It might lift you up, but only to a mediocre level. Again RAG can improve things if the model has access to the right data.

AI-assisted programming nicely reflects both the aspects, hallucinations and mediocrity. According to the Devs gaining little, if anything, from AI coding assistants article and the comments on Reddit, the main outcome seems to be more bugs, not increased productivity. The hopes for 10x software engineers and autonomous coding agents haven’t materialized.

The above average coders won’t gain much from AI assistance, the below average coders will be able to churn out more slop. “It’s time to build!”, they used to exclaim in unison after the OpenAI dev day. A year later, these voices are strangely silent. What have you built, friends? Come show us.

However, don’t feel bad using AI assistance for coding. Being below average is localized. Everybody has gaps in their knowledge. For example, let’s say we have two sets of n vectors, and we want to compute distances from every vector in one set to every vector in the other set. There is a dedicated function for that in scipy: cdist. Most people will probably never need it anyway. We didn’t know about it until a chatbot told us. Thanks, chatbot!